Emotion AI Market by Component (Hardware, Software, and Services), by Technology (Machine Learning, Natural Language Processing, Computer Vision, and Others), by Detection Modality (Facial Expression, Voice & Speech, Text & NLP, and Others), by Deployment Mode (On-premise, Cloud-based, and Hybrid), by Organization Size (Large, Small & Medium), and Others – Global Opportunity Analysis and Industry Forecast, 2024–2030

Overview

The global Emotion AI market was valued at USD 3.90 billion in 2024, is estimated at USD 4.90 billion in 2025, and is predicted to reach USD 15.49 billion by 2030, registering a CAGR of 25.87% from 2025 to 2030.
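As a quick sanity check on the headline figures, the growth rate can be recomputed from the 2025 and 2030 market sizes quoted above. The snippet below is a minimal sketch using the standard compound annual growth rate formula; the function name `cagr` and the variable names are illustrative and are not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the overview, in USD billion.
size_2025 = 4.90
size_2030 = 15.49

# Five compounding periods separate 2025 and 2030.
implied_cagr = cagr(size_2025, size_2030, years=2030 - 2025)
print(f"Implied CAGR 2025-2030: {implied_cagr:.2%}")
```

The implied rate comes out at roughly 25.9%, which agrees with the stated 25.87% once rounding of the endpoint values is taken into account.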

The emotion AI market is evolving rapidly as industries embrace human-centric automation to enhance personalization and emotional intelligence in digital experiences. Emotion AI technologies that leverage facial expression analysis, voice tone, body language, and physiological signals are being widely adopted across sectors such as automotive, healthcare, education, and customer service.

Automotive OEMs are integrating in-cabin emotion sensing for safety and regulatory compliance, while healthcare providers are utilizing AI to detect emotional states and support mental health diagnostics. Wearables and extended reality (XR) platforms are also unlocking new use cases, with personalized models achieving over 95% emotion recognition accuracy.

However, privacy concerns and regulatory scrutiny, especially within the EU and the US, pose significant compliance challenges. The market is broadly segmented by components, technologies, modalities, deployment models, and applications, enabling diverse integration opportunities. With advances in machine learning, NLP, and biosensors, Emotion AI is transitioning from experimental to essential, shaping the next era of empathetic and intelligent human-machine interaction.

Demand for Human-Centric Automation Fuelling Market Expansion

As industries increasingly prioritize personalization and emotional intelligence in digital interactions, the demand for human-centric automation is becoming a key driver. Traditional AI systems are primarily logic- and rule-based, but modern consumers and end-users expect empathetic, context-aware responses, especially in sectors such as customer service, healthcare, education, and automotive.

Emotion AI enables machines to recognize, interpret, and respond to human emotions through facial expressions, voice tone, body language, and text sentiment, making automation more intuitive and user-friendly. Thus, sentiment analysis is no longer just a technological upgrade; it is becoming essential for brands and institutions aiming to build trust, improve engagement, and foster meaningful human-machine interactions in the age of digital transformation.

Automotive Industry Leads the Charge in Emotion AI Adoption

The automotive industry is rapidly accelerating the adoption of emotion AI by embedding advanced in-cabin sensing and driver-monitoring technologies into production vehicles, effectively transforming cars into intelligent, emotionally aware environments. European regulations mandating driver monitoring systems in new vehicles from July 2024 have propelled OEMs to integrate cameras and AI software that not only detect distraction and drowsiness but also interpret emotional cues such as frustration or surprise.

For instance, Smart Eye has secured orders worth SEK 75 million from a major Korean manufacturer for interior sensing systems (covering full-cabin emotion and presence detection), with production set for 2026. By tightly integrating emotion-aware AI into mass-market vehicles and aligning with regulatory and consumer demand, the auto sector is significantly boosting the development and deployment of emotion AI technologies.

Growing Adoption of Emotion AI in the Healthcare Industry Accelerates Market Demand

The healthcare industry is emerging as a major growth driver for the market, owing to the increasing need for personalized, empathetic, and tech-enabled patient care. With rising mental health concerns, chronic illness burdens, and the shift toward remote and virtual healthcare, hospitals and digital health platforms are adopting emotion recognition tools to better understand and respond to patients' emotional states.

Emotion AI is being integrated into mental health apps, telemedicine platforms, and eldercare robots, enabling real-time mood analysis, stress detection, and behavioural assessments. Hospitals are also using these technologies to monitor patient discomfort and pain in non-verbal patients, especially in critical care or paediatric settings. AI-driven emotion recognition has shown considerable promise in enhancing patient care by bridging deficiencies in conventional healthcare systems.

Emotion recognition systems utilizing natural language processing provide real-time monitoring of emotional states, enabling healthcare personnel to respond proactively to patient requirements. As healthcare systems globally invest in AI to enhance diagnostics, therapy, and patient engagement, the adoption of emotionally intelligent systems is expanding rapidly, positioning healthcare as a key vertical fueling growth and innovation in the market.

Regulatory and Privacy Concerns Hinder Market Growth

Despite its growing adoption across industries, the market faces significant challenges. Because emotion AI relies on sensitive personal data such as facial expressions, voice, and physiological signals, questions around consent, data ownership, and ethical use have become increasingly prominent.

Regulatory frameworks like the EU’s GDPR and similar data protection laws in other countries impose strict guidelines on how emotional data can be collected, stored, and processed. These regulations create compliance hurdles for developers and limit large-scale deployment, especially in regions with stringent privacy norms. As a result, while the technology holds immense potential, regulatory scrutiny and privacy concerns continue to act as roadblocks to its full-scale market growth.

Integration of Emotion AI in Wearable Products Presents Future Growth Opportunity

The integration of Emotion AI into wearable products such as smartwatches, fitness trackers, and AR/VR headsets presents a significant growth opportunity for the global market. These wearables, equipped with biometric and behavioural sensors, enable real-time emotion detection through facial expressions, voice tone, heart rate variability, and galvanic skin response.

As consumers increasingly seek personalized and emotionally intelligent technology, demand is rising across sectors like healthcare, mental wellness, gaming, and personal productivity. Moreover, wearable-based emotion recognition supports continuous, passive monitoring, offering deeper insights than traditional point-in-time emotion detection methods. This trend is expected to drive innovation and expand the addressable market for Emotion AI vendors in the coming years.

Market Segmentations and Scope of the Study

The emotion AI market report is comprehensively segmented based on several critical dimensions. By component, it includes hardware, software, and services. By technology, it spans machine learning, NLP, computer vision, deep learning, and edge computing. By detection modality, it includes facial expressions, voice & speech, text & NLP, physiological signals, and body movement & gestures. Deployment modes are segmented into cloud-based, on-premise, and hybrid. The market is also categorized by organization size, which includes large enterprises and small & medium enterprises, and by application areas such as emotion detection & recognition, biometric and behaviour analysis, and others. By region, the market is classified into North America, Europe, Asia-Pacific, and RoW.

Geographical Analysis

The rising demand for emotionally intelligent and human-centric automation is significantly accelerating the growth of the emotion AI market in North America, which continues to lead the global landscape. This momentum is largely driven by strong public and private sector investments aimed at integrating AI into real-world, human-facing applications.

For instance, the U.S. government allocated a budget of over $3 billion across agencies to responsibly develop, test, procure, and integrate transformative AI solutions. Complementing this public investment, major technology firms such as OpenAI, Microsoft, and Alphabet Inc. are pushing the boundaries of emotion-aware AI by implementing key strategies.

A notable example is OpenAI’s launch of GPT-4o in May 2024, a model designed to interpret and respond to human emotions with enhanced nuance and empathy. These developments highlight how emotion AI is becoming a core enabler of next-generation, human-centric automation, particularly in applications such as mental health support, customer service, and personalized digital experiences.

Europe is also making steady progress in the Emotion AI space, with a strong emphasis on ethical AI practices and regulatory compliance. The European Union’s Artificial Intelligence Act, which entered into force in August 2024, is paving the way for a responsible and innovation-driven AI ecosystem, especially strengthening applications like in-cabin sensing in the automotive sector.

This regulatory focus on ethical, transparent, and human-centric AI has created a conducive environment for Emotion AI technologies that enhance driver and passenger safety through real-time emotion and behavior monitoring. According to the European Transport Safety Council’s PIN report, the EU-27 achieved a 12% decrease in road fatalities between 2019 and 2024.

In the Asia-Pacific region, the market is expanding rapidly, driven by widespread smartphone adoption and increasing internet penetration, both of which signal a strong regional push toward digital transformation and emotionally aware AI systems. According to the Southeast Asia Public Policy Institute, AI adoption could increase the region’s total gross domestic product (GDP) by 13–18%, equating to nearly US$1 trillion by 2030.

China is at the forefront, deploying advanced AI surveillance systems that go beyond facial recognition to include sophisticated “city brains” that combine multiple data streams to monitor urban trends. These systems create a pervasive surveillance network and are reportedly used by state authorities to pre-empt civil unrest. Such regulatory frameworks and technology deployments have positioned China as a leading force in scaling Emotion AI across sectors such as public safety, traffic management, and urban services, making it the principal driver in the Asia-Pacific market.

In the Rest of the World (RoW), the market is growing slowly but steadily, especially in regions such as Latin America, the Middle East, and parts of Africa. These areas are still in the early stages of adopting emotion AI, but interest and investment are gradually increasing. Rising investments in digital infrastructure are laying the groundwork for future Emotion AI applications. Although progress is gradual, these regions hold substantial untapped potential, making them important to the long-term growth of the global Emotion AI market.

Strategic Innovations Adopted by Key Players

Key players in the global emotion AI industry are focusing on AI-driven innovations, platform enhancements, and collaborative partnerships to advance emotion-aware capabilities across automotive, wearable, advertising, and voice applications.

- In June 2025, Smart Eye, the parent company of Affectiva and a leading developer of Driver Monitoring Systems (DMS), announced the launch of AI ONE, a complete DMS built into one compact, self-contained unit. Smart Eye’s offerings target automotive safety, market research, and consumer engagement, and its combined portfolio includes Affectiva Media Analytics, which is trusted by top brands and processes millions of videos annually.

- In May 2025, Tobii AB and Prophesee announced a collaboration to develop next-generation event-based neuromorphic eye-tracking technology for AR/VR and smart eyewear. By combining Tobii’s expertise in eye-tracking with Prophesee’s event-driven vision sensors, the partnership aims to deliver ultra-low-latency, power-efficient tracking that can capture subtle eye movements and visual attention in real time. This advancement is expected to enhance Emotion AI capabilities by enabling devices to infer users’ emotional states and cognitive load through precise, non-verbal visual cues, paving the way for emotionally responsive AR/VR environments.

- In October 2024, Emteq Labs introduced smart glasses equipped with inward-facing sensors that track subtle facial muscle movements and other bio signals to interpret emotional states. These glasses can infer users' moods and intentions in real time. The technology represents a significant advancement in wearable Emotion AI by enabling continuous, real-world emotional tracking.

- In June 2024, Realeyes introduced a groundbreaking AI-powered heatmap technology that visually maps viewers’ emotional and attentional responses to video content. This Emotion AI innovation enables advertisers to pinpoint which parts of their ads evoke specific emotions, such as joy, confusion, or boredom, at a frame-by-frame level. By merging facial coding and attention metrics, the tool delivers precise emotional analytics to optimize creative performance and audience engagement.

- In March 2024, Hume AI released its Empathic Voice Interface (EVI). This emotionally intelligent AI, trained on millions of human conversations, can detect when users finish speaking, predict their preferences, and tailor vocal responses to improve satisfaction over time.

Key Benefits

-

The report provides quantitative analysis and estimations of the market from 2025 to 2030, which assists in identifying the prevailing industry opportunities.

-

The study comprises a deep-dive analysis of the current and future emotion AI market trends to depict the prevalent investment pockets in the industry.

-

Information related to key drivers, restraints, and opportunities and their impact on the Emotion AI market is provided in the report.

-

Competitive analysis of the key players, along with their market share is provided in the report.

-

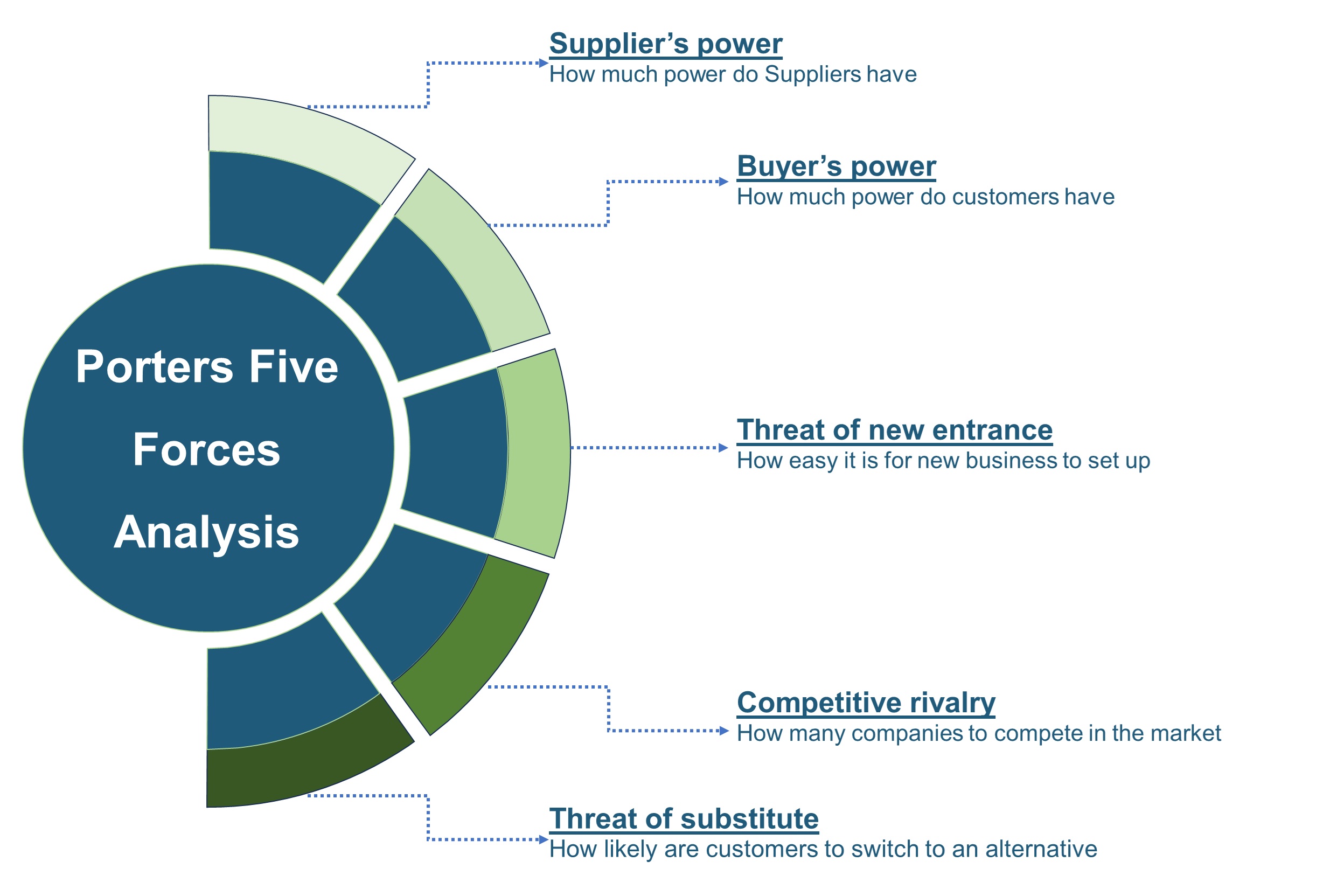

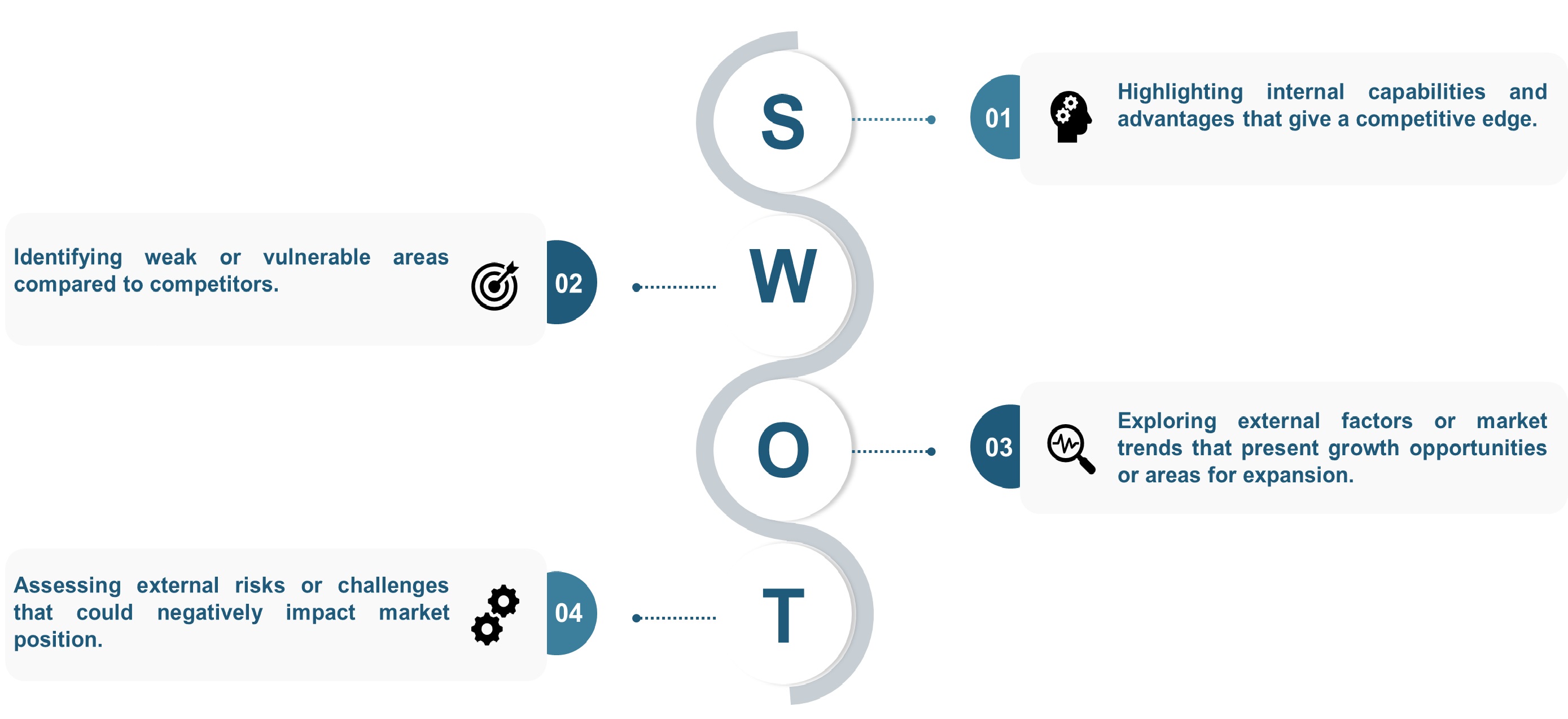

SWOT analysis and Porters Five Forces model is elaborated in the study.

-

Value chain analysis in the market study provides a clear picture of roles of stakeholders.

Emotion AI Market Segments

By Component

- Hardware
  - Wearable Devices
    - Smart Watches
    - Fitness Bands
    - EEG Headsets
  - Cameras
  - Sensors
  - Microphones
  - Others
- Software
  - Emotion Recognition Software
  - Facial Expression Recognition Software
  - Speech & Voice Recognition Software
  - Gesture & Posture Recognition Software
  - Natural Language Processing (NLP)
- Services
  - Professional Services
  - Managed Services
  - Integration & Deployment
  - Consulting & Training

By Technology

- Machine Learning (ML)
- Natural Language Processing (NLP)
- Computer Vision
- Deep Learning
- Edge Computing

By Detection Modality

- Facial Expression
- Voice & Speech
- Text & Natural Language Processing (NLP)
- Physiological Signals (Heart rate, skin conductivity)
- Body Movement & Gestures

By Deployment Mode

- On-premise
- Cloud-based
- Hybrid

By Organization Size

- Large Enterprises
- Small and Medium Enterprises (SMEs)

By Application

- Emotion Detection & Recognition
- Biometric and Behaviour Analysis
- Surveillance and Monitoring
- Sentiment Analysis
- Robotics
- Human-Computer Interaction
- Market Research and Advertising
- Health & Wellness Monitoring

By End-user

- Healthcare & Life Sciences
- Automotive & Transportation
- Consumer Electronics
- Retail & E-Commerce
- BFSI (Banking, Financial Services, Insurance)
- Media & Entertainment
- IT & Telecom
- Education
- Gaming
- Government & Public Sector
- Customer Service & Call Centre
- Others

By Region

- North America
  - The U.S.
  - Canada
  - Mexico
- Europe
  - The UK
  - Germany
  - France
  - Italy
  - Spain
  - Denmark
  - Netherlands
  - Finland
  - Sweden
  - Norway
  - Russia
  - Rest of Europe
- Asia-Pacific
  - China
  - Japan
  - India
  - South Korea
  - Australia
  - Indonesia
  - Singapore
  - Taiwan
  - Thailand
  - Rest of Asia-Pacific
- RoW
  - Latin America
  - Middle East
  - Africa

Key Players

- IBM
- Affectiva (Smart Eye)
- WaveForms AI
- Eyeris Technologies
- Nemesysco
- Emotibot
- Captemo
- MorphCast
- MoodMe
- Visage Technologies AB
- Entropik Tech
- Emteq Labs
- Hume AI

Report Scope and Segmentation

| Parameters | Details |
| --- | --- |
| Market Size in 2025 | USD 4.90 billion |
| Revenue Forecast in 2030 | USD 15.49 billion |
| Growth Rate | CAGR of 25.87% from 2025 to 2030 |
| Analysis Period | 2024–2030 |
| Base Year Considered | 2024 |
| Forecast Period | 2025–2030 |
| Market Size Estimation | Billion (USD) |
| Growth Factors | |
| Countries Covered | 28 |
| Companies Profiled | 15 |
| Market Share | Available for 10 companies |
| Customization Scope | Free customization (equivalent to up to 80 working hours of analysts) after purchase. Addition or alteration to country, regional, and segment scope. |
| Pricing and Purchase Options | Avail customized purchase options to meet your exact research needs. |
