AI Chips 2025: Huawei, Fujitsu-Nvidia, Microsoft Strategies

Published: 2025-10-04

Introduction: Why AI Chips Are the New Battleground

Artificial intelligence (AI) is no longer just about algorithms and software. The true bottleneck today lies in computing power, and at the heart of it are AI chips. These specialized processors are designed to handle the immense workloads of AI models, from training large language models to powering autonomous machines.

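In 2025, three major developments highlight how nations and companies are racing to define the future of AI hardware: Huawei’s Atlas SuperPoD clusters in China, the Fujitsu-Nvidia partnership on an energy-efficient chip for Japan, and Microsoft’s push toward custom silicon for its data centers.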

Together, these shifts reveal a landscape marked by competition, self-reliance, and sustainability.

Huawei’s “Most Powerful” AI Chip Cluster

Huawei recently unveiled two computing systems—the Atlas 950 SuperPoD and Atlas 960 SuperPoD—which it claims surpass Nvidia’s offerings in computing power, memory, and bandwidth. The company emphasized that these “logical machines” can learn, think, and reason, positioning them as foundational tools for China’s AI future.

Huawei claims that, compared with Nvidia’s NVL144 system, the Atlas 950 SuperPoD has:

- 56.8x more Neural Processing Units (NPUs)
- 6.7x greater computing power
- 15x larger memory capacity
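The ratios Huawei cites can be combined for a rough per-unit comparison. The sketch below is illustrative arithmetic only: it assumes "56.8x more" means 56.8 times as many processing units and that Huawei's NPUs are counted against the NVL144's processing units on a like-for-like basis, neither of which the announcement spells out. The function and variable names are hypothetical.

```python
# Ratios as claimed by Huawei for the Atlas 950 SuperPoD relative to Nvidia's NVL144.
# These are vendor claims quoted in the article, not independent measurements.
NPU_COUNT_RATIO = 56.8   # processing units, SuperPoD vs. NVL144
COMPUTE_RATIO = 6.7      # total computing power
MEMORY_RATIO = 15.0      # total memory capacity


def per_unit_ratio(system_ratio: float, unit_count_ratio: float) -> float:
    """Return the per-unit ratio implied by a system-level ratio.

    Assumes (hypothetically) that units are counted on a comparable basis
    in both systems.
    """
    return system_ratio / unit_count_ratio


compute_per_unit = per_unit_ratio(COMPUTE_RATIO, NPU_COUNT_RATIO)
memory_per_unit = per_unit_ratio(MEMORY_RATIO, NPU_COUNT_RATIO)

print(f"Implied compute per unit: {compute_per_unit:.2f}x")
print(f"Implied memory per unit:  {memory_per_unit:.2f}x")
```

Under these assumptions, each unit in the SuperPoD would deliver roughly 0.12x the compute and 0.26x the memory of its Nvidia counterpart, which suggests the claimed system-level lead comes from scale rather than per-chip performance.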

The company also said that the next chips in its Ascend AI series will launch in 2026, as part of a broader computing architecture built to meet long-term demand.

The timing is strategic. With the United States restricting exports of Nvidia’s most advanced chips to China, Huawei’s development signals an urgent push for semiconductor self-sufficiency.

Huawei’s SuperPoDs symbolize China’s determination to reduce dependence on U.S. technology while competing directly with Nvidia in raw AI power.

Fujitsu and Nvidia’s Energy-Efficient AI Chip for Japan

In Japan, Fujitsu and Nvidia announced a collaboration to develop an energy-efficient AI chip by 2030. Unlike Huawei’s focus on raw power, this project emphasizes sustainability and sovereignty.

Key features of the partnership:

- The chip will combine Nvidia GPUs with Fujitsu CPUs, creating hybrid systems optimized for AI workloads.
- Nvidia’s high-speed interconnect technology will allow multiple chips to function like a single processor.
- The initiative is closely linked to FugakuNEXT, Japan’s next-generation supercomputer, expected to deliver 5–10x more computing power than its predecessor, Fugaku.

Beyond national projects, Fujitsu aims to apply these chips in commercial AI applications, robotics, and “physical AI” for autonomous machines. The company frames this as a step toward “sovereign AI”, where nations build infrastructure and governance frameworks independently.

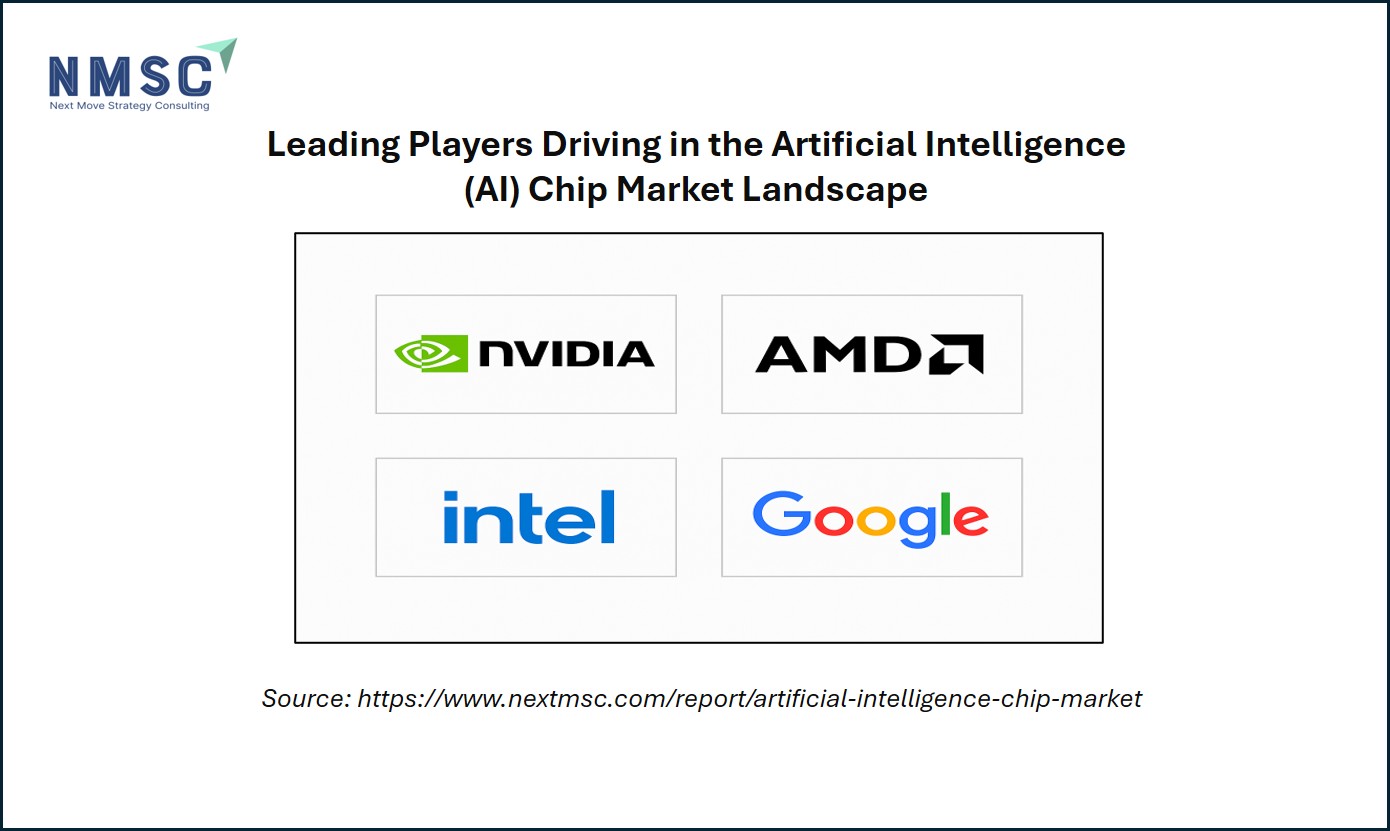

Key Players Shaping the Global AI Chip Industry

The global AI chip industry is highly competitive, with several leading companies driving innovation and market growth.

Prominent players include NVIDIA Corporation, Advanced Micro Devices, Inc. (AMD), Intel Corporation, Google LLC (Alphabet Inc.), Amazon Web Services, Inc. (AWS), Microsoft Corporation, Apple Inc., Qualcomm Incorporated, Huawei Technologies Co., Ltd. (Ascend / HiSilicon), Alibaba Group Holding Ltd. (T-Head / Pingtouge), International Business Machines Corporation (IBM), Tesla, Inc., Samsung Electronics Co., Ltd., Graphcore Limited, Cerebras Systems, Inc., Groq, Inc., SambaNova Systems, Inc., Cambricon Technologies Corporation Limited, Tenstorrent Inc., and MediaTek Inc., among others.

These companies pursue strategies such as new product launches, strategic partnerships, and regional expansions to strengthen their presence and sustain dominance in the global AI chip market.

Microsoft’s Push for Custom AI Chips

While Huawei and Fujitsu highlight national strategies, Microsoft reflects the private sector’s pursuit of control and optimization.

According to Microsoft’s Chief Technology Officer Kevin Scott, the company wants to rely mainly on its own custom AI chips in future data centers. Currently, it uses a mix of Nvidia, AMD, and its own silicon, but growing demand for AI capacity has forced cloud providers to rethink their dependencies.

Key points from Microsoft’s strategy:

- Microsoft introduced the Azure Maia AI Accelerator and the Cobalt CPU in 2023.
- The firm is working on next-generation chips, alongside new cooling technologies such as microfluidics to handle overheating.
- Despite huge capital expenditure (over $300 billion committed in 2025 by big tech firms including Microsoft, Meta, Amazon, and Alphabet), demand still outpaces capacity.

Scott called the situation a “massive crunch” in computing, underscoring how even the largest players cannot scale quickly enough to meet AI demand.

Summary: Microsoft’s custom chip strategy aims to reduce reliance on Nvidia and AMD, optimize data center performance, and secure capacity for its AI-heavy ecosystem, particularly its partnership with OpenAI.

Comparative Table: Key AI Chip Developments (2025)

| Company/Region | Focus Area | Highlight | Long-term Vision |
|---|---|---|---|
| Huawei (China) | Performance | Atlas SuperPoDs claims | Self-sufficiency amid |
| Fujitsu & Nvidia | Efficiency | Hybrid GPU-CPU chip, | Sustainable, sovereign |
| Microsoft (U.S.) | Customization | Own chips (Maia, Cobalt) + | Reduce reliance, optimize |

Next Steps: What Businesses and Policymakers Should Do

- Monitor Global AI Hardware Rivalries: Competition between the U.S., China, and Japan is intensifying. Stakeholders must track how national strategies shape access to computing power.
- Prioritize Energy Efficiency: With AI models consuming vast amounts of energy, businesses should explore energy-optimized solutions like those being developed by Fujitsu and Nvidia.
- Diversify Chip Supply Chains: Depending solely on Nvidia or AMD is risky. Companies should evaluate emerging alternatives from Huawei and Microsoft.
- Invest in System-Level Innovation: As Microsoft emphasized, AI infrastructure is not just about chips but about system design, from cooling to networking.
- Prepare for Regional AI Sovereignty Models: Governments and enterprises may need to align strategies with evolving frameworks of “sovereign AI”, ensuring compliance and competitiveness.

Next Move Strategy Consulting’s View on the AI Chip Market

According to Next Move Strategy Consulting, the latest announcements by Huawei, Fujitsu-Nvidia, and Microsoft underscore three converging forces in the AI chip market: performance, efficiency, and control. Huawei’s SuperPoDs highlight China’s ambition to secure AI independence through raw computing power, while Fujitsu and Nvidia’s collaboration reflects Japan’s emphasis on sustainable, sovereign AI infrastructure.

Meanwhile, Microsoft’s strategy to prioritize its own chips demonstrates how hyperscale cloud providers are moving toward tighter control and system-level innovation. Looking ahead to 2030, the AI chip market is expected to expand significantly, driven by demand from data centers, robotics, and consumer applications, but challenges such as high production costs, energy demands, and geopolitical restrictions will test resilience. Competitive advantage will rest not only on speed and scale but also on delivering balanced solutions that combine high performance, energy efficiency, and sovereignty.

Conclusion

AI chips are no longer just components; they are geopolitical assets and strategic enablers. Huawei is pushing performance boundaries, Fujitsu and Nvidia are redefining efficiency, and Microsoft is betting on customization for control.

The global AI chip race will continue to reshape technology infrastructure, influence policy, and determine who leads in the era of intelligent machines. For industries and governments alike, the message is clear: mastering AI chips means mastering the future.

About the Author

Tania Dey is an experienced Content Writer specializing in digital transformation and industry-focused insights. She crafts impactful, data-driven content that enhances online visibility and aligns with emerging market trends. Known for simplifying complex concepts, Tania Dey delivers clear, engaging narratives that empower organizations to stay ahead in a competitive digital landscape.

About the Reviewer

Debashree Dey is a skilled Content Writer, PR Specialist, and Assistant Manager with expertise in digital marketing. She creates impactful, data-driven campaigns and audience-focused content to boost brand visibility. Passionate about creativity, she also draws inspiration from design and innovative projects.
